In cosmology, there is the concept of the holographic universe: the idea that a three-dimensional volume of space can be entirely described by the exposed information on its two-dimensional surface. An organization’s security posture works in a similar way, which is why attack surface management is vital: a vulnerability is almost meaningless unless it is exploitable by a bad actor. The trick to determining where the vulnerable rubber meets the exposed road is identifying what’s actually reachable, taking into account security controls and other defense-in-depth measures.

While attack surfaces are expanding in multiple ways, becoming more numerous and more specific, two areas of growth that merit attention are operational technology (OT) and the cloud. In the runZero Research Report, we explored how the prevalence of OT and cloud technology combined with their unique associated risks warrants a deeper look at how to navigate challenges across the attack surfaces for these types of environments.

OT & IT convergence will be the end state

OT and industrial control systems (OT/ICS) environments are undergoing massive changes in a new era of convergence with IT devices and networks. In fact, the 2022 report from the U.S. President’s National Security Telecommunications Advisory Committee on IT-OT Convergence concludes that we must “accept that IT/OT convergence will be the end state” of IT and OT. It is evident that the merger of these historically separated networks under the general IT umbrella has created another high-value attack surface for attackers to plunder.

Historically, security was of less concern than safety and reliability for the “grade-separated” networks supporting OT/ICS devices. These networks had different dynamics, using industry-specific network protocols and abiding by maintenance schedules that were less frequent than IT systems. From an attacker’s perspective, OT systems were relatively soft targets with potentially lucrative rewards.

OT equipment has always been designed for long-term reliability and infrequent changes, so many factory floors, water treatment plants, critical infrastructure, and other industrial processes use equipment that is relatively slow compared to modern PCs. OT equipment often excludes encryption and authentication at the protocol level to support real-time control requirements.

And until recently, OT was simply not IT’s problem. Improvements to networking and security technologies have changed this, allowing organizations to link their OT and IT networks (sometimes on purpose, and sometimes not). Teams that were previously responsible for securing laptops and servers are now also responsible for OT security. With mandates to improve management and monitoring efficiencies, systems that were once in a walled garden are now, at least in theory, reachable from anywhere in the world.

OT/ICS around the world

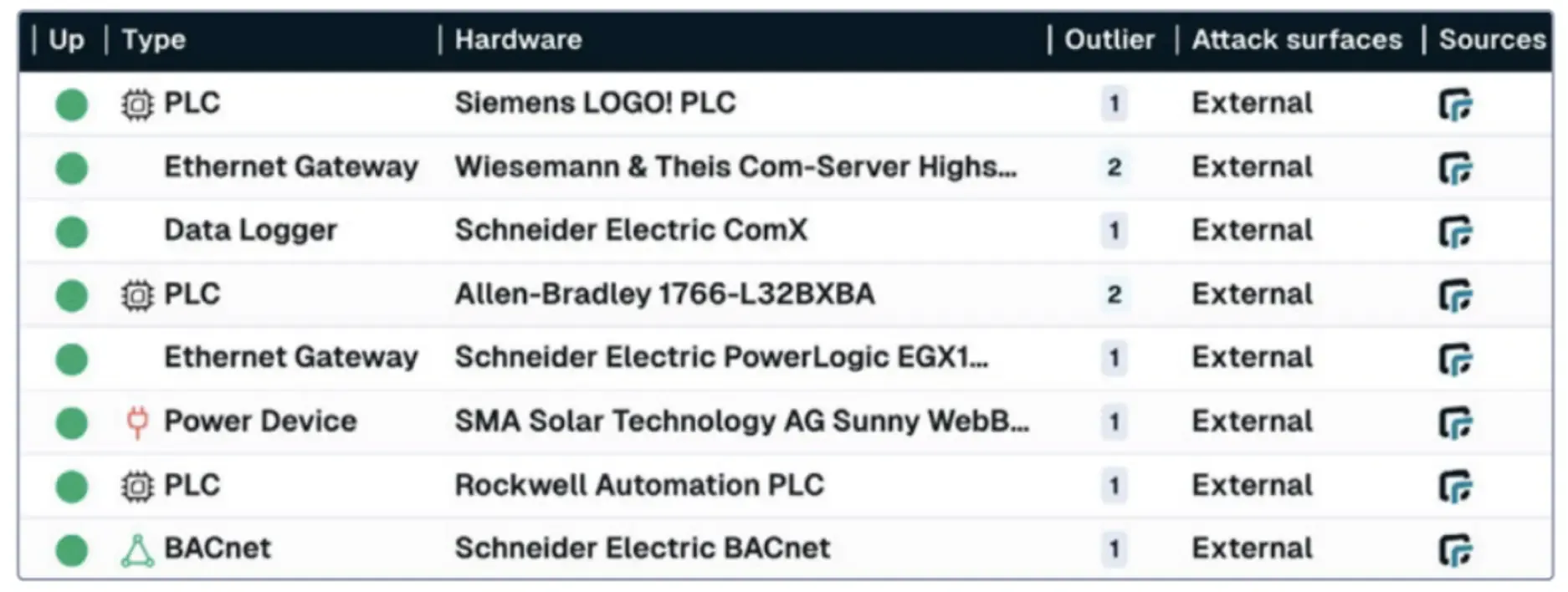

Data from the runZero research team confirms that thousands of OT/ICS devices are indeed “reachable from anywhere in the world.” These devices are prime targets for state actors and ransom seekers, as compromising them can result in industrial or utility disruption.

The number of industrial control systems directly exposed to the public Internet is terrifying. While the year-over-year increase of exposed devices has finally slowed, the total number continues to climb. Over 7% of the ICS systems in the runZero Research Report’s sample data were connected directly to the public Internet, illustrating that organizations are increasingly placing critical control systems on the public Internet.

FIGURE 1 - A selection of industrial devices detected by runZero on the public Internet.

OT Scanning: Passive becomes sometimes active

OT devices often run industry-specific software on hardware with limited computing power. These constraints, combined with the long-term nature of OT deployments, result in an environment that does not respond well to unexpected or excessive network traffic.

Stories abound of naive security consultants accidentally shutting down a factory floor with vulnerability scans. As a result, OT engineers have developed a healthy skepticism for any asset inventory process that sends packets on the network and instead opted for vendor-specific tools and passive network monitoring. Passive monitoring works by siphoning network traffic to an out-of-band processing system that identifies devices and unexpected behavior, without creating any new communication on the network.

While passive discovery is almost entirely safe, it is also limited. By definition, passive discovery can only see the traffic that is sent, and if a device is quiet or does not send any identifying information across the network, the device may be invisible.
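The limitation can be illustrated with a minimal sketch. It assumes observed traffic has already been reduced to `(source MAC, source IP, protocol)` tuples; the function name, the sample frames, and the `deployed` set are all hypothetical and only stand in for a real capture.

```python
def passive_inventory(observed_frames):
    """Build an inventory from (source_mac, source_ip, protocol) tuples seen on the wire."""
    inventory = {}
    for mac, ip, protocol in observed_frames:
        entry = inventory.setdefault(mac, {"ips": set(), "protocols": set()})
        entry["ips"].add(ip)
        entry["protocols"].add(protocol)
    return inventory


# Hypothetical capture: two devices talk, a third stays silent.
frames = [
    ("00:11:22:33:44:55", "10.0.0.5", "modbus"),
    ("00:11:22:33:44:55", "10.0.0.5", "http"),
    ("66:77:88:99:aa:bb", "10.0.0.7", "arp"),
]
# Hypothetical ground truth of what is physically plugged in.
deployed = {"00:11:22:33:44:55", "66:77:88:99:aa:bb", "cc:dd:ee:ff:00:11"}

inventory = passive_inventory(frames)
invisible = deployed - set(inventory)  # devices that never sent a frame
print(sorted(invisible))
```

The third device never appears in the inventory because nothing it sent was captured, which is exactly the blind spot described above.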

Passive deployments are also challenging at scale, since it’s not always possible to obtain a full copy of network traffic at every site, and much of the communication may occur between OT systems and never leave the deepest level of the network.

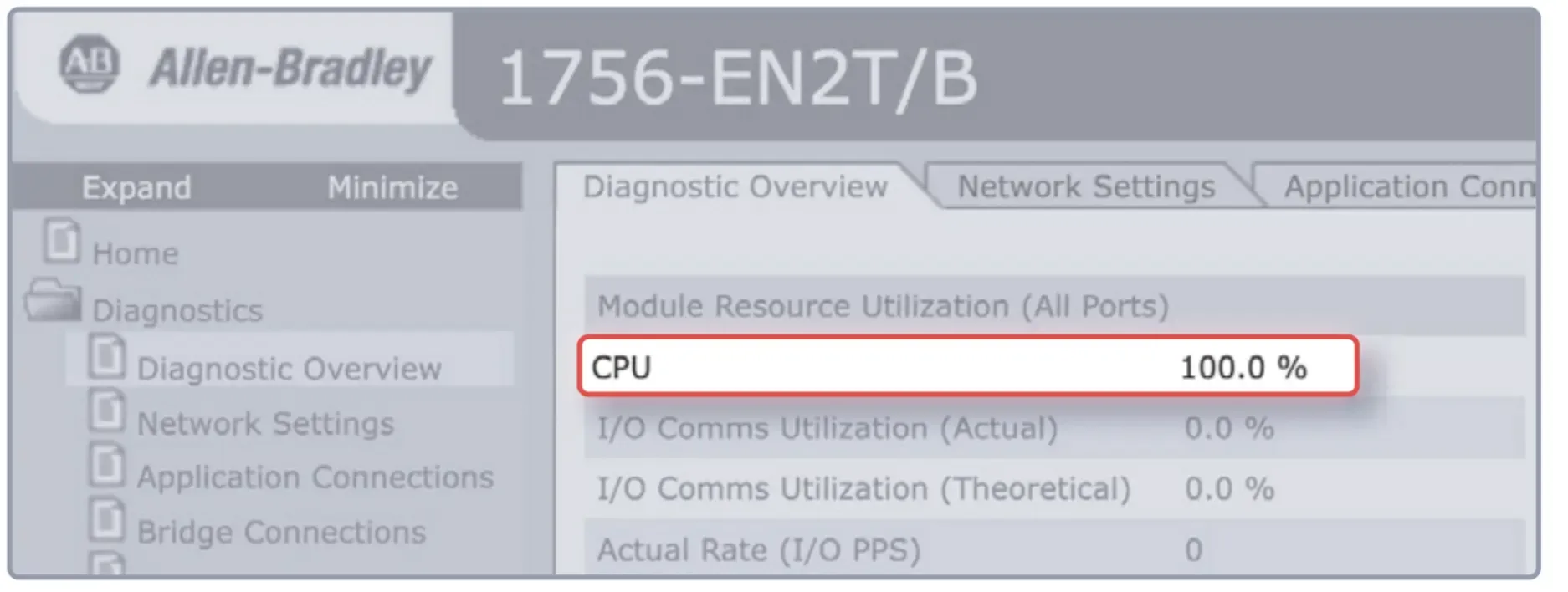

FIGURE 2 - An Allen-Bradley industrial PLC indicating 100% CPU utilization due to the device receiving a high rate of packets from an active scan NOT conducted by runZero.

Active scanning is faster, more accurate, and less expensive to deploy, but most scanning tools are not appropriate or safe to use in OT environments. Active scanning must be performed with extreme care. Large amounts of traffic, or traffic that is not typically seen by OT devices, can cause communication disruptions and even impact safety systems.

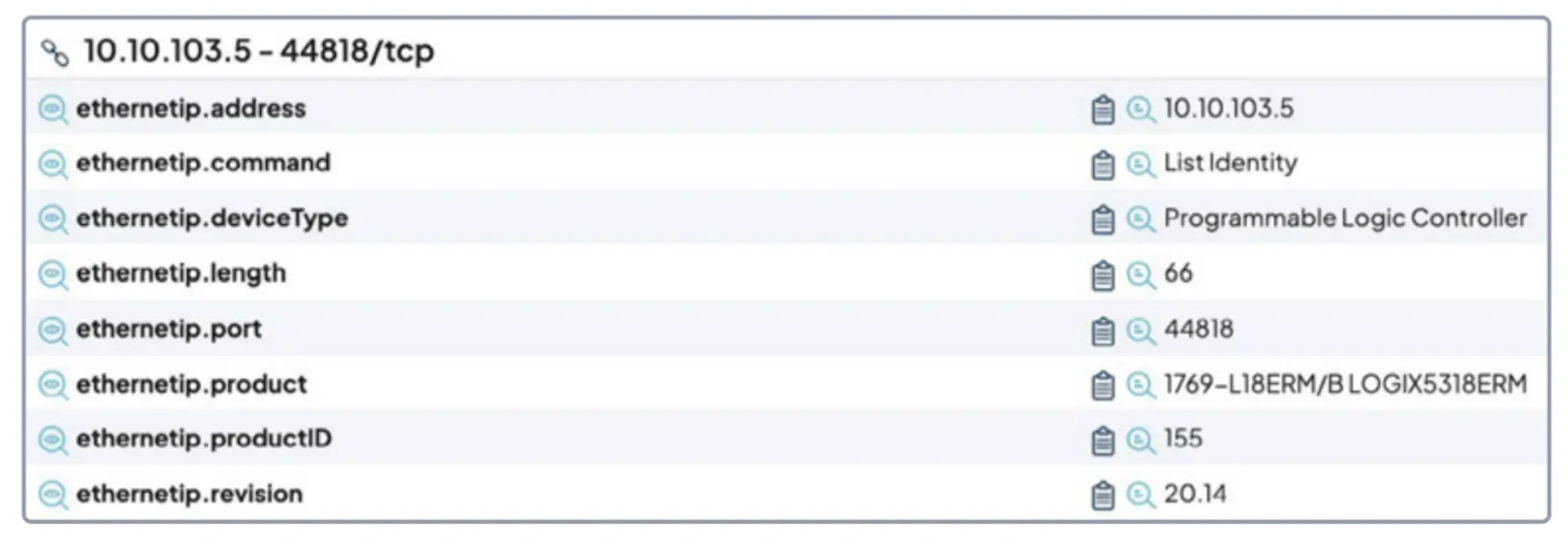

FIGURE 3 - A partial screenshot of an OT device detected by a runZero active scan.

Safe active scans

runZero enables safe scans of fragile systems through a unique approach to active discovery. This approach adheres to three fundamental principles:

- Send as little traffic as possible
- Only send traffic that the device expects to see
- Incrementally discover each asset to avoid methods that may be unsafe for a specific device

runZero supports tuning of traffic rates at the per-host level as well as globally across the entire task. runZero’s active scans can be configured to send as little as one packet per second to any specific endpoint, while still quickly completing scans of a large environment at a reasonable global packet rate.
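To make the interaction between the two limits concrete, here is a minimal sketch of a scheduler that enforces a per-host rate and a global rate at the same time. The `ScanPacer` class, its parameters, and the simple minimum-interval approach are assumptions for illustration, not runZero's implementation.

```python
from collections import defaultdict


class ScanPacer:
    """Picks send times that respect both a per-host and a global packet rate."""

    def __init__(self, per_host_pps: float, global_pps: float) -> None:
        self.host_gap = 1.0 / per_host_pps    # min seconds between packets to one host
        self.global_gap = 1.0 / global_pps    # min seconds between any two packets
        self.next_host = defaultdict(float)   # host -> earliest allowed send time
        self.next_global = 0.0

    def schedule(self, host: str, now: float) -> float:
        """Return when a packet to `host` may be sent, and reserve that slot."""
        send_at = max(now, self.next_global, self.next_host[host])
        self.next_global = send_at + self.global_gap
        self.next_host[host] = send_at + self.host_gap
        return send_at


# One packet per second per host, four packets per second overall.
pacer = ScanPacer(per_host_pps=1, global_pps=4)
targets = ["10.0.0.1", "10.0.0.2", "10.0.0.1", "10.0.0.3"]
times = [pacer.schedule(host, now=0.0) for host in targets]
print(times)  # [0.0, 0.25, 1.0, 1.25]
```

The second packet to `10.0.0.1` waits a full second, while packets to other hosts only wait for the global gap. A production scheduler would likely reorder the queue so a waiting host does not hold up the hosts behind it.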

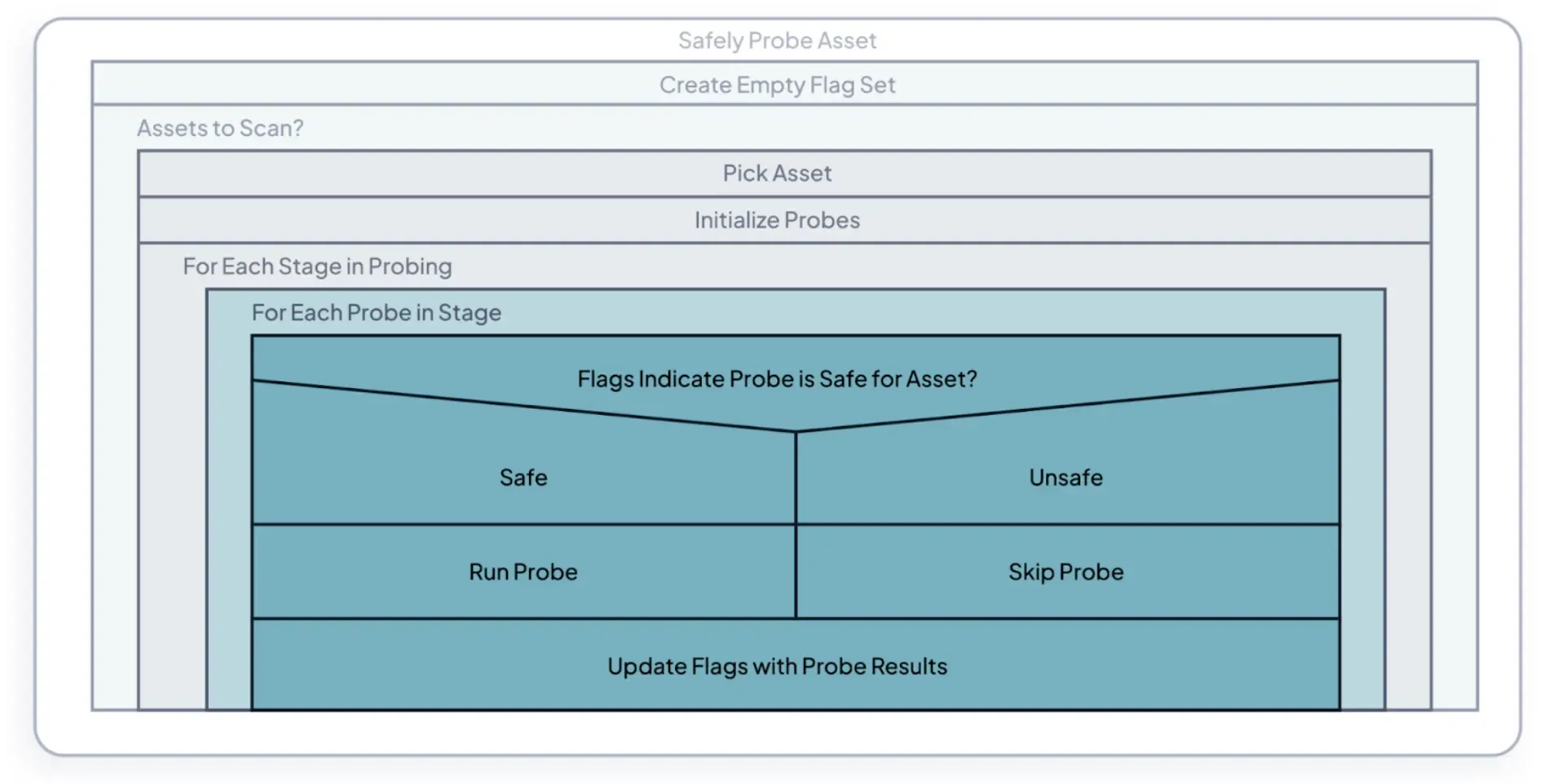

runZero is careful to send only valid traffic to discovered services and specifically avoids any communication over OT protocols that could disrupt the device. This logic is adaptive, and runZero’s active scans are customized per target through a policy of progressive enhancement.

runZero’s progressive enhancement is built on a series of staged “probes.” These probes query specific protocols and applications and use the returned information to adapt the next phase of the scan for that target. The earliest probes are safest for any class of device and include ARP requests, ICMP echo requests, and some UDP discovery methods. These early probes determine the constraints for later stages of discovery, including enumeration of HTTP services and application-specific requests. The following diagram describes how this logic is applied.

FIGURE 4 - A high-level overview of the “progressive enhancement” probing process.
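The staged idea can also be sketched in code. The example below is an illustrative assumption rather than runZero's actual logic: the stage names, the gating rules, and the `SIMULATED` responses (which replace real network I/O) are all hypothetical. What it shows is the general pattern of each stage deciding whether the next one is safe to send.

```python
# Simulated responses stand in for real network I/O (hypothetical data).
SIMULATED = {
    "10.0.0.5": {"icmp_echo": True, "open_tcp": [80], "http_server": "ExamplePLC-Web"},
    "10.0.0.8": {"icmp_echo": True, "open_tcp": [80], "http_server": "ExampleServer"},
    "10.0.0.9": {"icmp_echo": False},
}


def discover(address: str, network: dict) -> dict:
    """Run staged probes, letting each stage decide whether the next is safe to send."""
    observed = network.get(address, {})
    result = {"address": address, "probes": [], "alive": False, "fragile": False}

    # Stage 1: low-impact liveness check that is safe for any class of device.
    result["probes"].append("icmp_echo")
    if not observed.get("icmp_echo"):
        return result  # nothing answered, so send nothing further
    result["alive"] = True

    # Stage 2: light service discovery, only for hosts that answered stage 1.
    result["probes"].append("tcp_connect")
    open_ports = observed.get("open_tcp", [])

    # Stage 3: application-level request, only against services actually found.
    if 80 in open_ports:
        result["probes"].append("http_get")
        banner = observed.get("http_server", "")
        # Earlier answers constrain later stages: flag suspected OT gear as fragile.
        result["fragile"] = "PLC" in banner

    # Stage 4: deeper enumeration is skipped for anything flagged as fragile.
    if open_ports and not result["fragile"]:
        result["probes"].append("deep_enumeration")
    return result


for address in SIMULATED:
    print(address, discover(address, SIMULATED)["probes"])
```

In this sketch the suspected PLC stops after the HTTP request, the ordinary server receives the deeper enumeration, and the silent host receives only the first probe.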

Lastly, runZero’s active scans also take into account shared resources within the network path. Active scans will treat all broadcast traffic as a single global host and apply the per-host rate limit to these requests. Scans that traverse layer 3 devices also actively reset the state within session-aware middle devices using a patent-pending algorithm. This combination allows runZero’s active scans to safely detect fragile devices and reduce the impact on in-path network devices as well as CPU-constrained systems within the same broadcast domain.
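One way to picture the broadcast rule is as a choice of rate-limit key. The sketch below is a hypothetical helper (`rate_limit_key` is not a runZero API) that maps every broadcast destination to one shared key, so that a per-host limit applies to all broadcast requests together.

```python
import ipaddress

LIMITED_BROADCAST = ipaddress.ip_address("255.255.255.255")


def rate_limit_key(destination: str, network: str) -> str:
    """Return the key whose per-host rate limit applies to this destination."""
    net = ipaddress.ip_network(network)
    addr = ipaddress.ip_address(destination)
    # Every host in the broadcast domain processes a broadcast packet, so all
    # broadcast destinations share one key instead of getting a budget each.
    if addr == net.broadcast_address or addr == LIMITED_BROADCAST:
        return "broadcast"
    return destination


print(rate_limit_key("192.168.1.255", "192.168.1.0/24"))  # broadcast
print(rate_limit_key("192.168.1.10", "192.168.1.0/24"))   # 192.168.1.10
```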

For those environments where active scanning is inappropriate or unavailable, runZero also supports comprehensive passive discovery through a novel traffic sampling mechanism. This sampling procedure applies runZero’s deep asset discovery logic to observed network traffic, which produces similar results to runZero’s active scanner in terms of depth and detail.

The cloud is someone else’s attack surface

The commoditization of computing power, massive advancements in virtualization, and fast network connectivity have led to just about any form of software, hardware, or infrastructure being offered “as a service” to customers. Where companies used to run their own data centers or rent rack units in someone else’s, they can now rent fractions of a real CPU or pay for bare metal hardware on a per-minute basis.

Cloud migrations are often framed as flipping a switch, but the reality is that these efforts can take years and often result in a long-term hybrid approach that increases attack surface complexity. The result is more systems to worry about, more connectivity between systems, and greater exposure overall.

Cloud migrations

A common approach to cloud migrations is to enumerate the on-premises environment and then rebuild that environment virtually within the cloud provider. runZero helps customers with this effort by providing the baseline inventory of the on-premises data center and making it easy to compare this with the new cloud environment. During this process, organizations may end up with more than twice as many assets, since the migration process itself often requires additional infrastructure. runZero has observed this first hand in the last five years by assisting with dozens of cloud migration projects. It is common for these projects to take longer than planned and result in more assets to manage at completion.
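A comparison of this kind can be sketched as simple set arithmetic. The example assumes both inventories are keyed by a shared workload name; that key choice, the function name, and the sample data are hypothetical.

```python
def compare_inventories(on_prem: dict, cloud: dict) -> dict:
    """Compare two inventories keyed by workload name (a hypothetical key choice)."""
    on_prem_names = set(on_prem)
    cloud_names = set(cloud)
    return {
        "migrated": sorted(on_prem_names & cloud_names),
        "not_yet_migrated": sorted(on_prem_names - cloud_names),
        "new_in_cloud": sorted(cloud_names - on_prem_names),
    }


# Hypothetical mid-migration snapshot.
on_prem = {"db01": "10.0.0.10", "files01": "10.0.0.20", "web01": "10.0.0.30"}
cloud = {
    "web01": "172.16.0.30",
    "db01": "172.16.0.10",
    "bastion": "172.16.0.5",
    "nat-gw": "172.16.0.1",
}

report = compare_inventories(on_prem, cloud)
total_during_migration = len(on_prem) + len(cloud)
print(report)
print(total_during_migration)  # 7
```

In this made-up snapshot, three original assets have become seven assets to track while both environments are live, because the old systems are still running and the cloud side needed supporting infrastructure of its own.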

The migration process can be tricky, with a gradual approach requiring connectivity between the old and new environments. Shared resources such as databases, identity services, and file servers tend to be the most difficult pieces to migrate; however, they are also the most sensitive components of the environment.

The result is that many cloud environments still have direct connectivity back to the on-premises networks (and vice-versa). A compromised cloud system is often just as catastrophic to an organization’s security situation as a compromised on-premises system, if not more so.

Ultimately, the lengthy migration process can lead to increased asset exposure in the short-term due to implied bidirectional trust between the old and new environments.

New Exposures

Cloud providers assume many of the challenges of data center management: failures at the power, network, storage, and hardware levels become the provider’s problem. New challenges arise to take their place, however, including unique risks that require a different set of skills to address adequately.

Cloud-hosted systems are Internet-connected by definition. While it’s possible to run isolated groups of systems in a cloud environment, cloud defaults favor extensive connectivity and unfiltered egress. Although cloud providers offer many security controls, only some of these are enabled by default, and they function differently than on-premises solutions.
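As a small illustration of auditing for unfiltered egress, the sketch below flags rules that allow outbound traffic to every address. The rule format is a hypothetical, provider-neutral dictionary and does not match any specific cloud provider's API.

```python
import ipaddress


def unfiltered_egress(rules):
    """Return the names of egress rules that allow traffic to every address."""
    flagged = []
    for rule in rules:
        if rule["direction"] != "egress" or rule["action"] != "allow":
            continue
        network = ipaddress.ip_network(rule["destination"])
        if network.prefixlen == 0:  # 0.0.0.0/0 or ::/0 covers the whole address space
            flagged.append(rule["name"])
    return flagged


# Hypothetical rule set.
rules = [
    {"name": "default-out", "direction": "egress", "action": "allow", "destination": "0.0.0.0/0"},
    {"name": "db-out", "direction": "egress", "action": "allow", "destination": "10.0.0.0/8"},
    {"name": "web-in", "direction": "ingress", "action": "allow", "destination": "0.0.0.0/0"},
]

flagged = unfiltered_egress(rules)
print(flagged)  # ['default-out']
```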

Cloud-hosted systems are also vulnerable to classes of attacks that are only significant in a shared computing environment. CPU-specific vulnerabilities like Meltdown, Spectre, and Spectre v2 force cloud operators to choose between performance and security. The mitigations in place for these vulnerabilities are often bypassed. For example, the recently disclosed CVE-2024-2201 allows for Spectre-style data stealing attacks on modern processors, a concern in shared-hosting cloud environments.

Additionally, the ease of spinning up new virtual servers means that cloud-based inventory is now constantly in flux, often with many stale systems left in unknown states. Keeping up with dozens (or even thousands) of cloud accounts and knowing who is responsible for them becomes a problem on its own.
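A first pass at this problem can be as simple as sorting assets by when they were last seen and whether anyone owns them. The sketch below assumes each asset record carries a `last_seen` timestamp and an `owner` field; the field names, the 30-day threshold, and the sample records are hypothetical.

```python
from datetime import datetime, timedelta


def triage_cloud_assets(assets, now, max_age=timedelta(days=30)):
    """Split asset IDs into those not seen recently and those with no recorded owner."""
    stale = [a["id"] for a in assets if now - a["last_seen"] > max_age]
    unowned = [a["id"] for a in assets if not a.get("owner")]
    return stale, unowned


# Hypothetical records; a fixed "now" keeps the example reproducible.
now = datetime(2024, 6, 1)
assets = [
    {"id": "vm-build-7", "last_seen": datetime(2024, 5, 30), "owner": "platform-team"},
    {"id": "vm-test-old", "last_seen": datetime(2024, 2, 14), "owner": None},
    {"id": "vm-demo", "last_seen": datetime(2024, 5, 28), "owner": ""},
]

stale, unowned = triage_cloud_assets(assets, now)
print(stale)    # ['vm-test-old']
print(unowned)  # ['vm-test-old', 'vm-demo']
```

Assets that land in both lists are the ones most likely to have been forgotten.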

We analyzed systems where runZero detected end-of-life operating systems (OSs), and found that the proportions of systems running unsupported OSs are roughly the same across the cloud and external attack surfaces. This implies that the ease of upgrading cloud systems may not be as great as advertised.

Hybrid is forever

Cloud infrastructure is here to stay, but so is on-premises computing. Any organization with a physical presence – whether retail, fast food, healthcare, or manufacturing – will require on-premises equipment and supporting infrastructure. Cloud services excel at providing highly available central management, but a medical clinic can’t stop treating patients just because their Internet connection is temporarily offline. A hybrid model requires faster connectivity and increasingly powerful equipment to securely link on-premises and cloud environments.

Even in more simplistic environments, cloud migrations leave behind networking devices, physical security systems, printers, and file servers. All of that equipment will most likely be linked to cloud environments, whether through a VPN or over the public Internet.

Closing Thought

As organizations increasingly rely on OT and cloud services, understanding and managing the attack surface has never been more critical. The unique vulnerabilities associated with these environments require proactive strategies like robust attack surface management, accurate and fast exposure management, and comprehensive asset inventory to safeguard against advanced emerging threats. Ultimately, fostering a culture that keeps pace with the threat landscape and adopts continuous improvement in security practices will be essential in navigating the complexities of OT and cloud environments.
