Now that we’re all back at work and the holidays are a distant memory, we’ve had a chance to take stock of the annual rush of end-of-year predictions and hot takes. Away from the hype, there are practical changes we’re already seeing across networks, vulnerabilities, and security operations, as discussed on the recent runZero Hour and in Tod Beardsley’s appearance on the TrojAI podcast, Predictions 2026: AI, Security, and the Next Wave of Tech.

What follows is what we’re expecting to see more of in 2026, from OT exposure and exploit noise to vulnerability decision-making, automation risks, and where AI genuinely helps defenders. Taken together, these predictions point to a 2026 shaped less by breakthroughs and more by how well organizations understand their own changing environments.

As OT exposure increases, ransomware will follow

OT environments are increasingly being managed through IT and cloud platforms for practical reasons: easier management, remote access, and operational efficiency. But that shift means more OT systems are now either directly exposed to the internet or sitting just one hop away from it.

In 2026, we predict ransomware will increasingly target OT and edge systems. Not because attackers have developed new techniques, but because these systems have become much more reachable.

What makes this especially challenging is that many OT environments were never designed with this level of connectivity in mind, and organizations often lack a clear, current understanding of how these systems are exposed.

Attackers scale noise, not capability

Attackers won’t suddenly become more capable in 2026, but they will become much louder. AI is already being used to generate exploit code at scale, and the result is a flood of low-quality output rather than a wave of consistently effective new attacks.

Every headline vulnerability now attracts instant, AI-generated proof-of-concept exploits, often published to GitHub without testing or validation. Most don’t work. Some barely compile. But they still demand attention, forcing defenders to waste time sorting through dozens of broken PoCs to find the handful that are actually relevant.

We expect this dynamic to intensify in 2026. AI gives attackers scale, not precision, and that scale creates noise that bleeds directly into defender workflows. The real cost shows up in time and focus, not breach statistics. Teams that can quickly determine whether a vulnerability or exploit applies to their environment will cope. Teams that can’t will spend 2026 chasing ghosts.

The real vuln problem is actionability, not volume

In 2026, we predict vulnerability management will hinge less on counting CVEs and more on deciding which ones deserve attention at all. Teams that treat every new disclosure as equally urgent will struggle. The bottleneck won’t be finding vulnerabilities: it will be determining which ones matter in a specific environment, and which can safely wait.

To date, there’s no evidence CVE quality is collapsing under the weight of AI. In 2025, around 900 CVEs were rejected, of which roughly 800 were rejected before they were ever published. Only about 100 were published and later withdrawn. That doesn’t point to a system overwhelmed by bad AI output.

What has changed is how difficult it’s become to decide what’s actionable. CVE issuance is now spread across hundreds of CNAs, not concentrated in a single authority, and vulnerability reports arrive with widely varying levels of clarity and usefulness. Some maintainers have already started rejecting AI-generated reports outright because of hallucinations and low signal. Interpretation is the key challenge.
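To make the actionability question concrete, here is a minimal Python sketch of checking disclosures against an asset inventory. It is an illustration under stated assumptions, not a real feed format or product API: the `Asset` and `Advisory` types, the product names, the placeholder CVE identifiers, and the `internet_exposed` flag are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    product: str
    version: str
    internet_exposed: bool  # hypothetical flag from an exposure inventory


@dataclass(frozen=True)
class Advisory:
    cve_id: str                  # placeholder identifiers, not real CVEs
    product: str
    affected_versions: frozenset


def split_actionable(advisories, inventory):
    """Return (act_now, can_wait, not_applicable) lists of CVE ids."""
    act_now, can_wait, not_applicable = [], [], []
    for adv in advisories:
        # Which assets in this environment actually run an affected version?
        hits = [a for a in inventory
                if a.product == adv.product and a.version in adv.affected_versions]
        if not hits:
            not_applicable.append(adv.cve_id)
        elif any(a.internet_exposed for a in hits):
            act_now.append(adv.cve_id)
        else:
            can_wait.append(adv.cve_id)
    return act_now, can_wait, not_applicable


inventory = [
    Asset("plc-gateway", "examplehmi", "2.1", internet_exposed=True),
    Asset("build-server", "exampleci", "5.0", internet_exposed=False),
]
advisories = [
    Advisory("CVE-EXAMPLE-0001", "examplehmi", frozenset({"2.0", "2.1"})),
    Advisory("CVE-EXAMPLE-0002", "exampleci", frozenset({"5.0"})),
    Advisory("CVE-EXAMPLE-0003", "otherproduct", frozenset({"1.0"})),
]
act_now, can_wait, not_applicable = split_actionable(advisories, inventory)
print(act_now, can_wait, not_applicable)
```

The three-way split is deliberately crude. The point is only that the decision depends on what is in the environment and how reachable it is, not on the number of disclosures.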

AI helps defenders where it supports triage

Despite the increase in noise, defenders aren’t starting from a position of weakness. AI is already proving useful in one specific area: triage. For years, security teams have been overwhelmed by alerts, vulnerability feeds, and signals competing for attention. LLMs are well suited to classification and prioritization, even when they’re imperfect, because misclassification in triage doesn’t mean something disappears: it just moves lower in the queue. (As an aside, this does contribute to the “low-priority is no-priority” binary problem, but that’s not new in vuln management.)

We expect this to become normalized in 2026. AI won’t (and certainly shouldn’t) replace analysts or make autonomous security decisions, but it will increasingly be used to sort, group, and contextualize work before a human ever looks at it. Teams that use AI to reduce alert fatigue and focus attention will cope better with rising noise, while teams that expect it to think or reason on their behalf won’t.
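The property that makes triage tolerant of imperfect classification, that a misjudged item sinks in the queue but never leaves it, can be sketched in a few lines of Python. This is an illustration only: `stand_in_score` is a hypothetical keyword scorer standing in for whatever model assigns priority, and the alert strings are invented.

```python
import heapq


def triage(alerts, score):
    """Order alerts by descending score without dropping any of them."""
    # Negate the score so the highest-priority alert pops first;
    # the index breaks ties and preserves arrival order.
    heap = [(-score(alert), index, alert) for index, alert in enumerate(alerts)]
    heapq.heapify(heap)
    ordered = []
    while heap:
        _, _, alert = heapq.heappop(heap)
        ordered.append(alert)
    return ordered


def stand_in_score(alert):
    # Hypothetical stand-in for a model-assigned priority between 0 and 1.
    keywords = {"ransomware": 0.9, "exposed": 0.7, "scan": 0.2}
    return max((w for k, w in keywords.items() if k in alert.lower()), default=0.1)


alerts = [
    "Port scan from known research network",
    "Exposed OT controller reachable from the internet",
    "Ransomware note found on file share",
    "Unusual login time",
]
queue = triage(alerts, stand_in_score)
for position, alert in enumerate(queue, start=1):
    print(position, alert)
```

Every alert that goes in comes out. A poor score changes when a human sees it, not whether they can.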

Automation creates new operational failures

As more security and operational tasks are automated, new classes of mistakes will appear. Not because the technology is malicious, but because it’s being asked to act with limited context. When AI agents are given permissions and autonomy without a clear model of what “normal” behavior looks like, errors can propagate quickly.

At some point in 2026, an incident won’t start with a human clicking a phishing link, but with an AI assistant doing it on that human’s behalf. Or it will start when one assistant convinces another to act on bad information: effectively, one AI assistant phishing another.

Automation can remove friction, but it can also remove pauses and checks. Without clear boundaries and visibility into what automated systems are doing, mistakes travel faster than people can intervene.
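One way to picture "clear boundaries and visibility" is a gate that every automated action must pass through. The Python sketch below is a hypothetical design, not a description of any particular agent framework: the `ActionGate` class, the action names, and the agent and target identifiers are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ActionGate:
    allowed: set                                   # actions an agent may take unattended
    audit_log: list = field(default_factory=list)  # every decision is recorded here

    def request(self, agent, action, target, approved_by=None):
        """Return True if the action may proceed; record every decision."""
        if action in self.allowed:
            decision = "auto-allowed"
        elif approved_by is not None:
            decision = f"approved by {approved_by}"
        else:
            # Anything outside the boundary pauses until a person signs off.
            decision = "held for human review"
        self.audit_log.append((agent, action, target, decision))
        return decision != "held for human review"


gate = ActionGate(allowed={"read_ticket", "summarize_alert"})
ok_read = gate.request("assistant-a", "read_ticket", "TICKET-1")
ok_click = gate.request("assistant-a", "open_link", "http://example.invalid/login")
ok_after = gate.request("assistant-a", "open_link", "http://example.invalid/login",
                        approved_by="analyst")
for entry in gate.audit_log:
    print(entry)
```

The allowlist restores the pause that automation removes, and the audit log provides the visibility, so a held action is a record someone can review and not a silent failure.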

A new AI-created vulnerability class emerges

2026 may be the year a genuinely new class of software vulnerability appears, one that isn’t just an evolution of existing bug types. This won’t come from AI writing obviously broken code, but from AI producing solutions that work, are adopted, and spread before anyone fully understands how or why they’re risky. The issue won’t be that the code fails; it will be that the code succeeds in ways people don’t naturally reason about.

The underlying concern is that models don’t think like programmers. They don’t reason in the same abstractions, assumptions, or constraints, and that difference matters. When AI-generated patterns are reused widely — because they solve real problems efficiently — flaws can propagate at scale before they’re recognized as vulnerabilities at all.

Our prediction isn’t that this will happen someday in the distant future. It’s that 2026 is when the industry will first have to confront vulnerabilities that exist specifically because of how AI systems reason, not because of familiar programming mistakes.

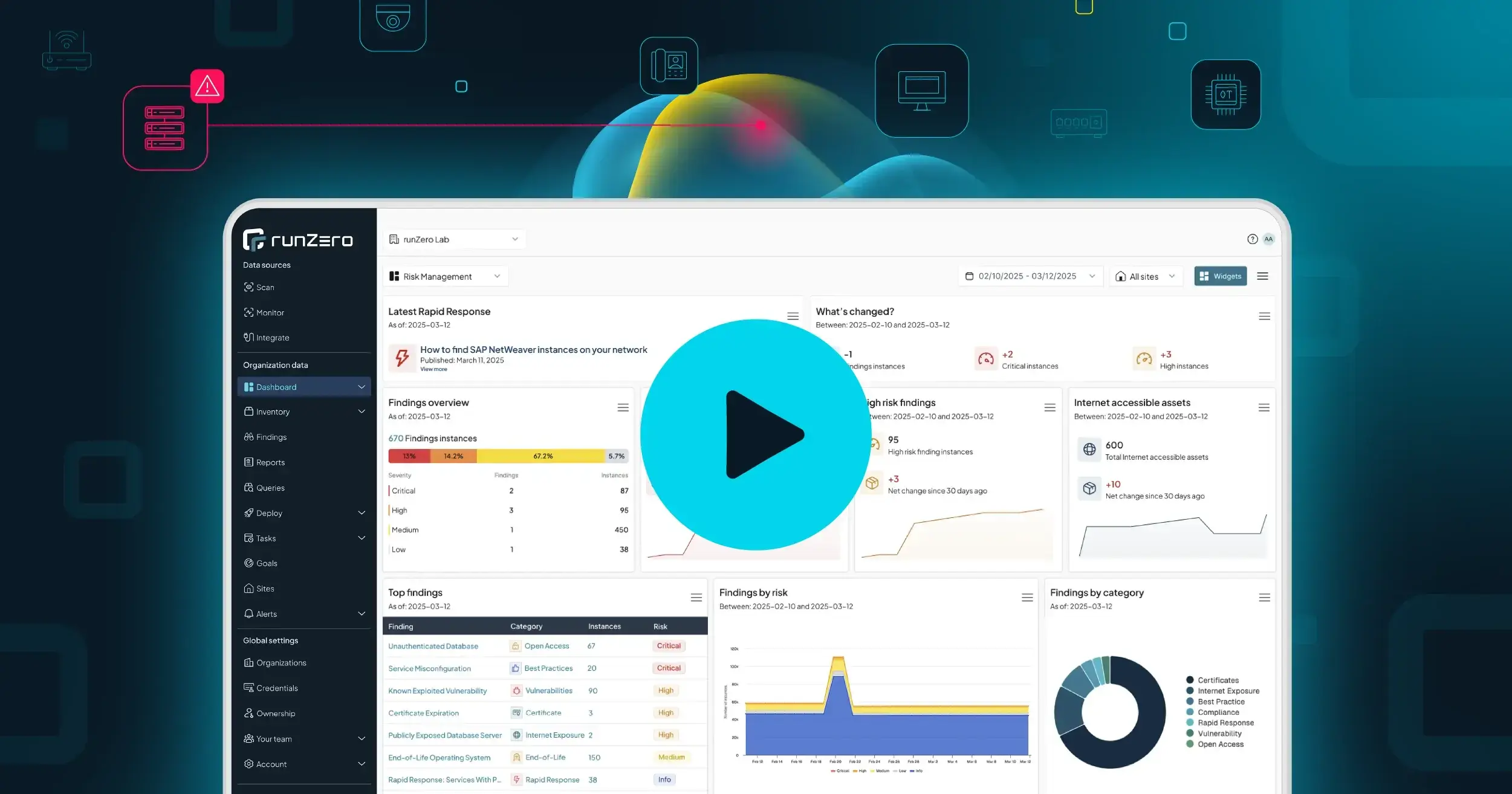

For the full discussion of these predictions (complete with context, caveats, and detours), plus a look back on 2025’s highs and lows, watch the latest runZero Hour.